Replacing a Proxmox Virtual Environment Server in a Ceph Cluster

The Situation

Imagine you have a cluster of Proxmox VE nodes that are part of a hyperconverged Ceph installation, and you want to replace one of the nodes with a brand-new installation of Proxmox VE. You don't intend to migrate its drives; you want to replace the node outright, with new drives and a fresh installation of Proxmox VE. For this to work, there are a handful of things you need to consider to prevent (1) unnecessary Ceph rebalances and (2) broken HA in Proxmox VE. The HA problem arises because Proxmox VE uses SSH internally to move data between nodes and to run commands on remote nodes. Proxmox does not remove a node's SSH host keys when the node is decommissioned, so when the replacement comes up with the same hostname and IP address but new host keys, the stale entries cause SSH connections between the nodes to fail.

The Strategy

Migrate all virtual machines off the node, and make sure you have copies of any local data or backups you want to keep. Also remove any scheduled replication jobs that target the node being removed.
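If you prefer the shell, replication jobs can be listed with `pvesr list` and deleted with `pvesr delete <id>`. The following is a minimal sketch rather than a tested tool: it reads `pvesr list` output, picks the jobs whose target is node2, and prints the matching delete commands (or runs them when `DRY_RUN=0`). It assumes the listing has a header row followed by one job per row, with the job ID in the first column and the target shown as `local/<node>` in the second, so compare that with your own output first. The helper names `run` and `remove_replication_jobs_to` are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: delete storage replication jobs that target a given node.
# ASSUMPTION: `pvesr list` prints a header row, then one job per row with
# the job ID in column 1 and the target (local/<node>) in column 2.

# Print the command instead of running it unless DRY_RUN=0.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Hypothetical helper: reads `pvesr list` output on stdin and deletes
# every job whose target is local/<node>.
remove_replication_jobs_to() {
  local node="$1" job
  awk -v target="local/$node" 'NR > 1 && $2 == target { print $1 }' |
    while read -r job; do
      # </dev/null keeps pvesr from consuming the job list on stdin.
      run pvesr delete "$job" </dev/null
    done
}
```

For example, `pvesr list | remove_replication_jobs_to node2` previews the deletions, and `pvesr list | DRY_RUN=0 remove_replication_jobs_to node2` performs them.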

Replacing a Proxmox Virtual Environment server in a hyperconverged Ceph configuration.

This guide assumes a four-node cluster with hostnames node1 through node4. We're replacing node2 and, for convenience, migrating its workloads to node1, although any other node in the cluster would work. Specifically, we are replacing node2 with a new installation of PVE on an upgraded server that keeps the same IP address(es) and hostname; this guide does not cover migrating an existing installation to a new chassis.

Confirm cluster status (prerequisite, optional).

From node2's CLI, run pvecm nodes.

```
node2# pvecm nodes

Membership information
----------------------
Nodeid Votes Name
1 1 node1
2 1 node2 (local)
3 1 node3
4 1 node4
```

Confirm that the node you intend to decommission is listed and has the "(local)" identifier next to its name. This confirms we're on the right machine.
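The same check can be scripted, for example as a guard before any destructive step. This is a sketch that assumes the row format shown above: each member row ends with the node name, and the current node's row has "(local)" after its name. `is_local_node` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Sketch: confirm that `pvecm nodes` marks the expected host as (local).
# ASSUMPTION: member rows end with the node name, and the current node's
# row has "(local)" after its name, as in the output above.

# Hypothetical helper: reads `pvecm nodes` output on stdin and exits 0
# only if the row for the given hostname carries the (local) marker.
is_local_node() {
  local expected="$1"
  awk -v name="$expected" '
    $NF == "(local)" && $(NF - 1) == name { found = 1 }
    END { exit !found }
  '
}
```

For example, `pvecm nodes | is_local_node node2 && echo "on node2"` prints the message only when run on node2.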

Migrate VMs, CTs, templates, and storage off of node2.

Using HA, migrate running VMs from node2 to node1.

Manually migrate all remaining stopped VMs, CTs, and templates from node2 to node1.
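The migrations can also be driven from node2's shell. This sketch reads `qm list` output and, for each VM, prints either `ha-manager migrate vm:<id> node1` (running VMs) or `qm migrate <id> node1` (stopped VMs and templates); with `DRY_RUN=0` it runs them instead. It assumes every running VM is an HA-managed resource, that the VM disks live on shared Ceph storage, and that `qm list` shows the VMID in the first column and the status in the third. Containers could be handled similarly with `pct migrate`, but `pct list` uses a different column layout, so they are left out. The helper names are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: migrate every VM listed by `qm list` to a target node.
# ASSUMPTIONS: running VMs are HA resources (vm:<id>); disks are on shared
# storage; `qm list` has a header row, VMID in column 1, status in column 3.

# Print the command instead of running it unless DRY_RUN=0.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Hypothetical helper: reads `qm list` output on stdin and migrates each
# VM to the target node.
migrate_vms_to() {
  local target="$1" vmid status
  awk 'NR > 1 { print $1, $3 }' |
    while read -r vmid status; do
      if [ "$status" = "running" ]; then
        # Ask the HA stack to live-migrate the running HA resource.
        run ha-manager migrate "vm:$vmid" "$target" </dev/null
      else
        # Stopped VMs and templates are moved offline.
        run qm migrate "$vmid" "$target" </dev/null
      fi
    done
}
```

`qm list | migrate_vms_to node1` previews the commands. Note that `ha-manager migrate` only submits a request to the HA stack, so check that those migrations have finished before moving on.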

Decommission node2 from Ceph.

- Set the OSDs on node2 to "out" and wait for the rebalance to finish (all placement groups back to active+clean).

- Once the rebalance is complete, stop and destroy all OSDs on node2.

- In the same screen of the PVE GUI, select each OSD that is out and click "Stop"; once it has stopped, open the "More" submenu and select "Destroy".

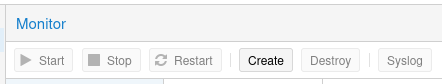

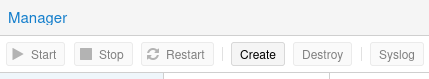

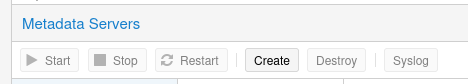

- Remove the Ceph services (monitor, manager, and metadata server) from node2.

- On node2's CLI, clean up the Ceph CRUSH map and remove the host bucket using "ceph osd crush remove node2".

- Power off node2 so it cannot rejoin the cluster with its old configuration, then from node1's CLI, run "pvecm delnode node2".
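For reference, the Ceph steps above roughly map to the following CLI commands, run on node2 before it is powered off. This is a hedged sketch: it assumes the monitor, manager, and MDS IDs equal the hostname (the usual pveceph default), takes the OSD IDs as arguments (for example, from `ceph osd ls-tree node2`), and uses `ceph osd safe-to-destroy` as the signal that the rebalance has finished. Drop the lines for any service node2 does not run, and keep `pvecm delnode` as a separate step on node1. The function names are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: CLI equivalent of the Ceph decommissioning steps for one host.
# ASSUMPTIONS: run on the host being removed; mon/mgr/mds IDs equal the
# hostname; OSD IDs are passed in explicitly.

# Print the command instead of running it unless DRY_RUN=0.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Hypothetical helper: decommission_ceph_host <hostname> <osd-id>...
decommission_ceph_host() {
  local host="$1" id
  shift

  # 1. Mark every OSD out so Ceph moves its data to the other hosts.
  for id in "$@"; do
    run ceph osd out "$id"
  done

  # 2. Poll until Ceph reports the OSDs can be removed without data loss.
  #    (In dry-run mode the check is printed once instead of polled.)
  until run ceph osd safe-to-destroy "$@"; do
    sleep 60
  done

  # 3. Stop each OSD, then destroy it; --cleanup also removes its
  #    partition table entries.
  for id in "$@"; do
    run systemctl stop "ceph-osd@$id"
    run pveceph osd destroy "$id" --cleanup
  done

  # 4. Remove the monitor, manager and metadata server on this host.
  run pveceph mon destroy "$host"
  run pveceph mgr destroy "$host"
  run pveceph mds destroy "$host"

  # 5. Remove the now-empty host bucket from the CRUSH map.
  run ceph osd crush remove "$host"
}
```

With hypothetical OSD IDs 4 and 5, `decommission_ceph_host node2 4 5` prints the plan and `DRY_RUN=0 decommission_ceph_host node2 4 5` executes it.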

Confirm node deleted.

From node1's CLI, run pvecm nodes.

```
node1# pvecm nodes

Membership information
----------------------
Nodeid Votes Name
1 1 node1 (local)
3 1 node3
4 1 node4
```
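To check this without reading the table by eye, a small filter can fail if node2 still appears. It assumes the membership row layout shown above, with a numeric node ID in the first column and the node name in the third; `node_is_gone` is a hypothetical name.

```bash
#!/usr/bin/env bash
# Sketch: confirm a removed host no longer appears in `pvecm nodes`.
# ASSUMPTION: membership rows have a numeric node ID in column 1 and the
# node name in column 3, as in the output above.

# Hypothetical helper: reads `pvecm nodes` output on stdin; exits 0 only
# if no membership row names the given host.
node_is_gone() {
  local host="$1"
  awk -v name="$host" '
    BEGIN { seen = 0 }
    $1 ~ /^[0-9]+$/ && $3 == name { seen = 1 }
    END { exit seen }
  '
}
```

For example, `pvecm nodes | node_is_gone node2 && echo "node2 removed"`.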

Clean up SSH keys.

There is a major detail missing from the official documentation: if you intend to join a node to the cluster with the same hostname and IP address the previous node had, SSH between the nodes (and with it migration and HA) will break unless you take some prerequisite actions.

Power on the new node2, preconfigured with the same hostname and IP address.

From node1, SSH into the new node2. Because the host key has changed, the SSH client will refuse to connect, and its error message will include a command that removes the offending key from the known hosts file. Use this command syntax to clear the keys for both the IP address and the hostname:

```
ssh-keygen -f '/etc/ssh/ssh_known_hosts' -R 'XXX.XXX.XXX.XXX'
ssh-keygen -f '/etc/ssh/ssh_known_hosts' -R 'node2.domain.com'
```

Now attempt to SSH into node2 again. You will get a nearly identical error, but this time the known_hosts file will be in the root user's .ssh directory:

```
ssh-keygen -f '/root/.ssh/known_hosts' -R 'XXX.XXX.XXX.XXX'
ssh-keygen -f '/root/.ssh/known_hosts' -R 'node2.domain.com'
```

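> Note: This second set of commands, which points to /root/, is not replicated across the cluster members and must be run manually on every member of the cluster. On Proxmox VE, /etc/ssh/ssh_known_hosts is typically a link into the shared /etc/pve cluster filesystem, while the files under /root/.ssh are local to each node.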

Once these stale keys have been removed, SSH will work correctly when the new node joins the cluster.
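Because the per-user cleanup has to be repeated on every node, a small wrapper around `ssh-keygen -R` can help avoid typos. This sketch only wraps the commands shown above. It assumes you pass the exact file path from your SSH client's error message plus each address or name to forget, and `forget_host_keys` is a hypothetical helper. If the error message also names the short hostname (for example `node2`), pass that as well.

```bash
#!/usr/bin/env bash
# Sketch: remove stale host keys for a replaced node from one file.
# ASSUMPTION: <file> is the path named in the SSH client's error message.

# Print the command instead of running it unless DRY_RUN=0.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Hypothetical helper: forget_host_keys <known-hosts-file> <host>...
# Runs `ssh-keygen -f <file> -R <host>` for each IP address or hostname.
forget_host_keys() {
  local file="$1" host
  shift
  for host in "$@"; do
    run ssh-keygen -f "$file" -R "$host"
  done
}
```

For example, run `DRY_RUN=0 forget_host_keys /etc/ssh/ssh_known_hosts XXX.XXX.XXX.XXX node2.domain.com` once, then the same call with the per-user file from the second error message (normally `/root/.ssh/known_hosts`) on every cluster member.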

Join node to cluster.

- Power on the server and configure its lights-out management (LOM) controller, such as a Dell DRAC, to use the correct IP address.

- Check /etc/hostname and /etc/hosts, and edit them if necessary, so that the hostname matches the previous installation's hostname.

- Reboot and verify that the hostname and IP address are correct.

- If the previous machine had a Proxmox license, apply it now.

- Validate network connectivity to all other nodes on the corosync network and on both the Ceph frontend (public network: consumption and management) and backend (cluster network: replication) networks.

- Join the Proxmox cluster.

- Install Ceph.

- Add Ceph monitor and Ceph manager to this node.

- Migrate a test VM to the new node to confirm that it can consume Ceph storage.

- If there are any other maintenance tasks to complete (such as moving another node onto the previous node2 hardware), do NOT add OSDs back to node2 until you are ready, since adding OSDs triggers another rebalance.
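The checks and join steps in this list can be sketched from node2's shell as follows. This is illustrative only: `check_hostname`, `check_peers`, and `join_and_add_ceph` are hypothetical helpers, and the addresses you pass are whatever your corosync and Ceph networks use. Only the join and Ceph commands sit behind the dry-run guard; the pings always run because they change nothing.

```bash
#!/usr/bin/env bash
# Sketch: pre-join checks and join/Ceph steps, run on the rebuilt node2.
# ASSUMPTION: all addresses passed in are placeholders for your own
# corosync and Ceph network addresses.

# Print the command instead of running it unless DRY_RUN=0.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Fail unless this machine already reports the expected hostname.
check_hostname() {
  local expected="$1" actual
  actual="$(uname -n)"
  if [ "$actual" != "$expected" ]; then
    echo "hostname is '$actual', expected '$expected'" >&2
    return 1
  fi
}

# Ping each peer address; report any that do not answer. Read-only, so
# it is not wrapped in run.
check_peers() {
  local ip failed=0
  for ip in "$@"; do
    if ! ping -c 3 -W 2 "$ip" >/dev/null 2>&1; then
      echo "no reply from $ip" >&2
      failed=1
    fi
  done
  return "$failed"
}

# Hypothetical helper: join the cluster via an existing member, then add
# the Ceph packages, a monitor and a manager on this node.
join_and_add_ceph() {
  local existing="$1"
  run pvecm add "$existing"   # asks for the existing node's root password
  run pveceph install         # installs the Ceph packages
  run pveceph mon create      # creates a monitor on this node
  run pveceph mgr create      # creates a manager on this node
}
```

For example: `check_hostname node2 && check_peers <peer-ip> ... && DRY_RUN=0 join_and_add_ceph <existing-node-ip>`. If corosync uses a dedicated network, `pvecm add` also accepts `--link0 <address>` to choose the local address for that link.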